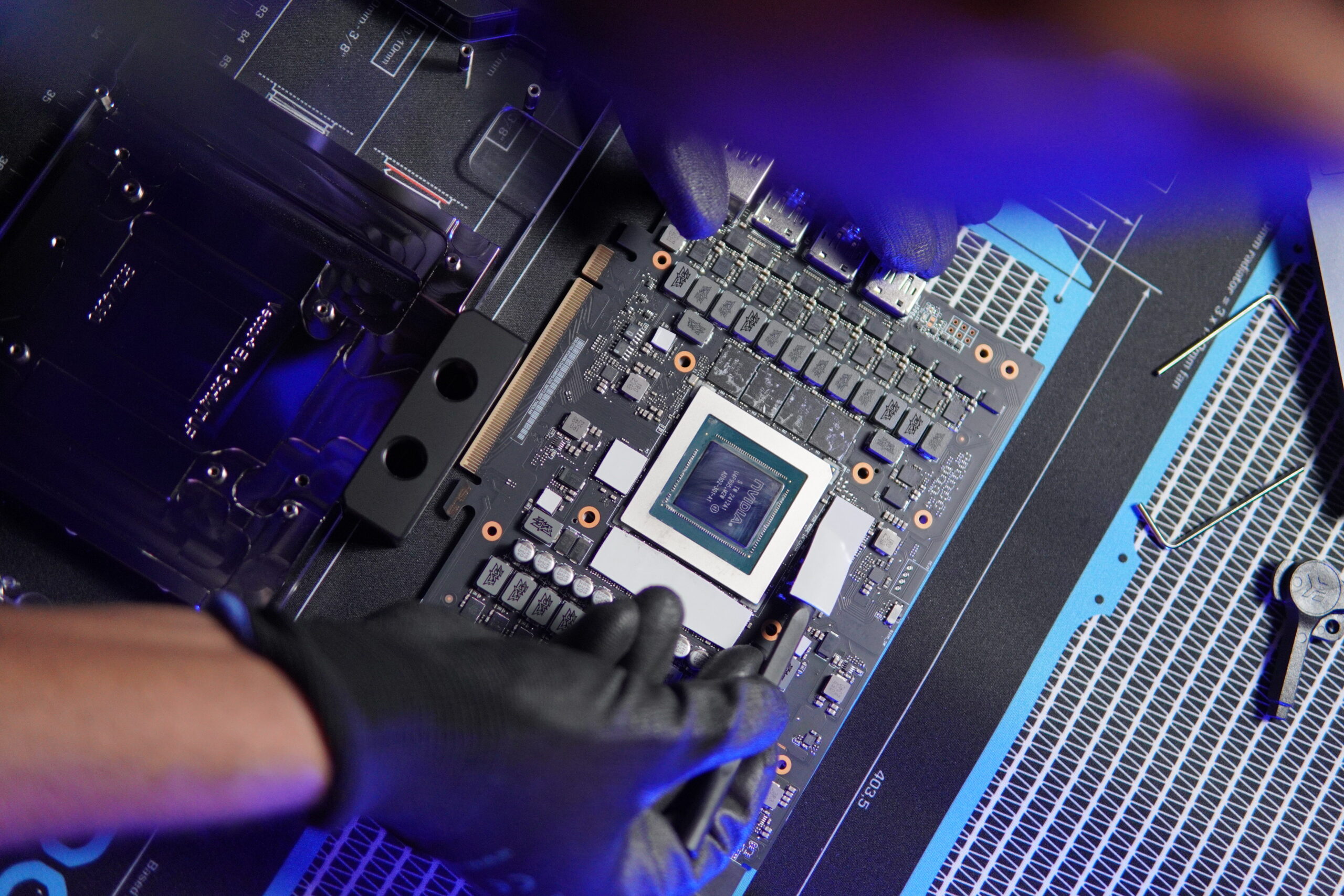

NVIDIA’s flagship Datacenter GPUs, such as the H100 are available in two primary form factors: SXM (Socketed Accelerated Module) and PCIe (Peripheral Component Interconnect Express), each tailored to meet specific performance requirements and infrastructure designs. The choice between these form factors depends on factors like scalability, interconnectivity, power requirements, and the intended workloads.

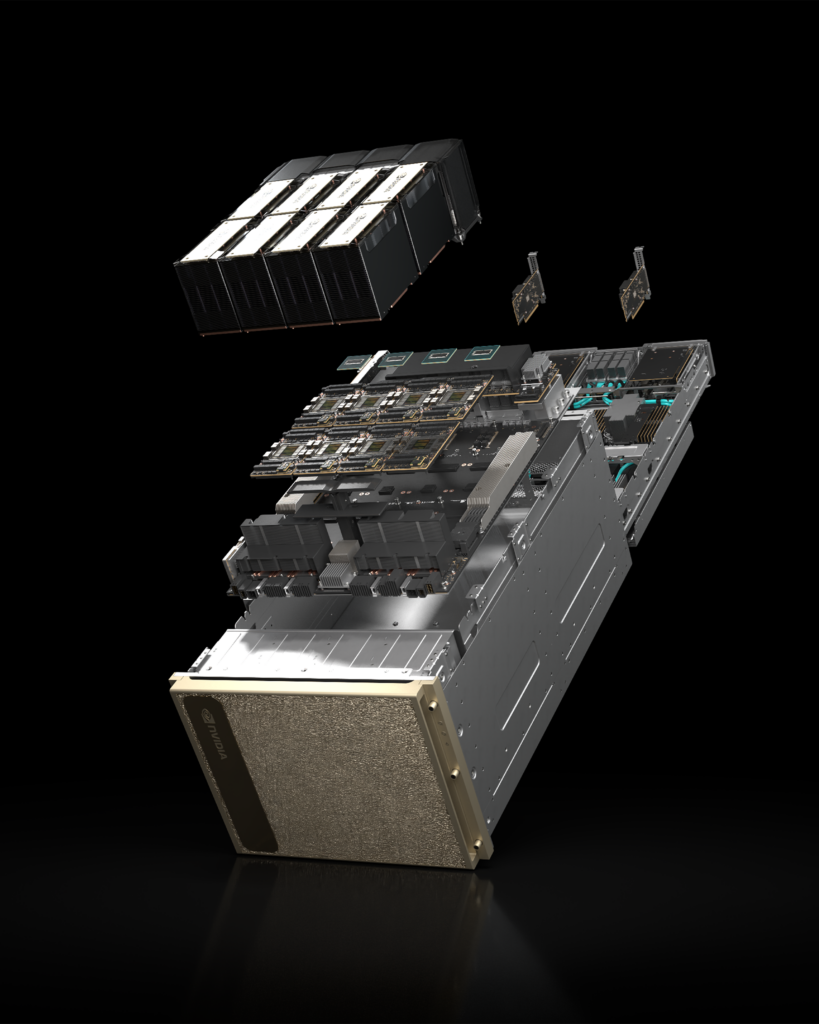

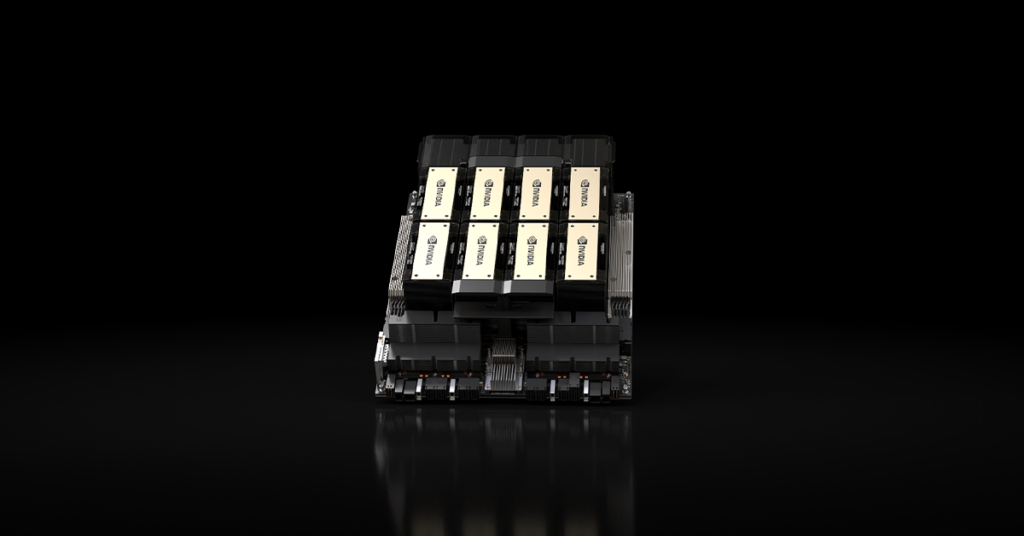

GPUs like the NVIDIA H100 are available in both PCIe and SXM, while newer flagships like the B200 are currently available only as SXM with HGX and DGX Servers.

Key differences and features

GPU Interconnects and Scalability

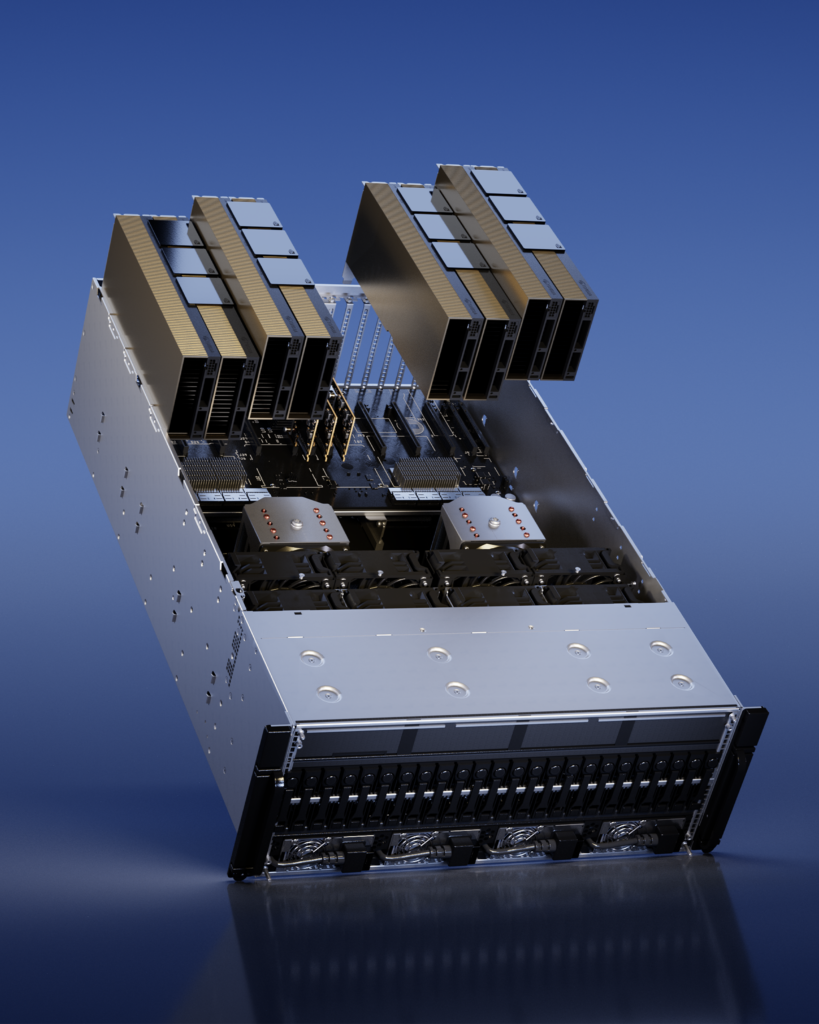

SXM GPUs use NVLink, offering up to 900 GB/s bandwidth for seamless communication between all GPUs in the same server. This significantly enhances multi-GPU workloads like AI training and HPC.

PCIe GPUs usually solely on the PCIe bus, which is adequate for less inter GPU communication-intensive tasks like inferencing or rendering. When using an NVLink bridge, they can only interconnect two GPUs instead of a full-mesh interconnect seen in the SXM version of the GPU.

For scaling across multiple servers, InfiniBand is typically used in SXM-based systems, allowing high-performance low-latency GPU clusters based on the NVIDIA SuperPOD reference architecture. PCIe systems do not typically use InfiniBand for inter-GPU scaling.

Performance and Power

SXM GPUs support higher power envelopes (700W for H100), enabling sustained higher performance and clock speeds. PCIe GPUs are limited to 350-400W, making them less power-hungry but also less capable of peak performance.

The performance difference due to the difference in power and clock speeds are outlined here: Comparing NVIDIA H100 PCIe vs SXM: Performance, Use Cases and More (hyperstack.cloud)

Compatibility

PCIe GPUs are widely compatible with standard server infrastructures, making them ideal for general-purpose data center deployments.

SXM GPUs require specialized servers and cooling solutions, increasing initial costs but offering unparalleled performance for high-end applications. SXM GPUs are not available separately and come prebuilt in Servers called HGX (if made by OEMs like Supermicro or ASUS) and DGX if made by NVIDIA themselves

| Feature | SXM GPUs | PCIe GPUs |

| GPU Interconnect | NVLink (up to 900 GB/s) | PCIe bus |

| Multi-GPU Scaling | NVSwitch + NVLink (low latency) | Host CPU coordination (higher latency) |

| Inter-Server Scaling | InfiniBand / SuperPOD | Not Available |

| Power Envelope | Up to 700W | 350-400W |

| Performance | Higher sustained performance | Moderate performance |

| System Requirements | Specialized HGX/DGX servers |

General-purpose servers |

| Cost | Higher upfront cost | Lower cost |

| Target Workloads | AI training, HPC, SuperPODs | Inference, rendering, general compute |

| Future-Ready GPUs | H200, B200 (SXM-only) | Limited to current-gen PCIe designs |

Summary

SXM GPUs are ideal for premium AI and HPC services, excelling in workloads that demand multi-GPU scalability, such as deep learning training and large-scale simulations. They leverage advanced interconnects like NVLink, NVSwitch, and InfiniBand to deliver superior performance in high-performance clusters, such as NVIDIA SuperPODs. In contrast, PCIe GPUs are better suited for cost-effective, general-purpose instances, particularly for tasks like inference, rendering, or workloads where GPUs function independently. Their lower power requirements and broader compatibility make them a budget-friendly choice for large-scale deployments.

Further Reading:

PCIe and SXM5 Comparison for NVIDIA H100 Tensor Core GPUs — Blog — DataCrunch

NVIDIA H100 PCIe vs. SXM5 (arccompute.io)