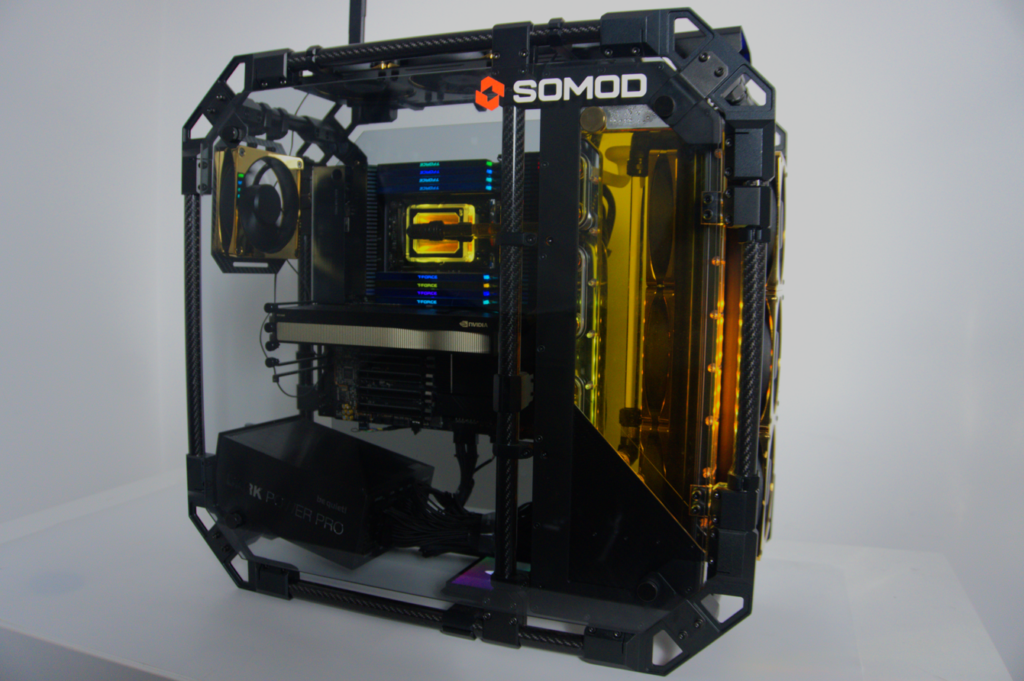

As part of the launch of SoMod PCs, the MBUZZ Labs team tasked me with benchmarking the new SoMod Neural PC to evaluate its AI performance. Given the workstation’s powerful specs, I set out to test its deep learning capabilities using industry-standard benchmarks.

Initial Benchmarking Roadblocks

My initial plan was to run Geekbench AI, but I quickly hit a roadblock: it wasn’t detecting the GPU, so it couldn’t measure the hardware I actually wanted to evaluate. Next, I considered MLPerf, one of the most recognized AI benchmarking suites. However, downloading the massive datasets that MLPerf requires would have consumed significant bandwidth and time, so I looked for an alternative.

That’s when I stumbled upon PyTorch’s deep learning benchmark repository on GitHub. It provided a lightweight yet effective way to test AI workloads. I made modifications to the test files and adjusted batch sizes to optimize performance for the RTX 5880 Ada GPU. With these tweaks, I finally had a solid benchmarking setup.
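The repository's scripts report throughput on their own. As a rough illustration of what an "images per second" figure means, here is a minimal pure-Python sketch of a timing harness. `measure_throughput`, `step_fn`, and `sync_fn` are hypothetical names for illustration only; they are not part of the benchmark repository.

```python
import time
from typing import Callable, Optional


def measure_throughput(
    step_fn: Callable[[], None],
    batch_size: int,
    warmup_iters: int = 10,
    timed_iters: int = 50,
    sync_fn: Optional[Callable[[], None]] = None,
) -> float:
    """Return samples per second for a hypothetical per-batch step function.

    sync_fn is an optional barrier. On a GPU this would be something like
    torch.cuda.synchronize, because CUDA kernels run asynchronously and the
    host clock could otherwise stop before the work is actually finished.
    """
    if batch_size <= 0 or timed_iters <= 0:
        raise ValueError("batch_size and timed_iters must be positive")

    # Warm-up iterations are excluded so one-time costs (kernel selection,
    # memory allocation, caching) do not distort the measurement.
    for _ in range(warmup_iters):
        step_fn()
    if sync_fn is not None:
        sync_fn()

    start = time.perf_counter()
    for _ in range(timed_iters):
        step_fn()
    if sync_fn is not None:
        sync_fn()
    elapsed = time.perf_counter() - start

    # Throughput = samples processed / wall-clock seconds.
    return batch_size * timed_iters / elapsed
```

For example, `measure_throughput(lambda: time.sleep(0.005), batch_size=32, warmup_iters=2, timed_iters=10)` returns a value a little under 6,400 samples per second, since each simulated step takes at least 5 ms.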

Hardware Specifications of the SoMod Neural PC

Here’s the hardware that powers this AI workstation:

- CPU: AMD Ryzen Threadripper PRO 5955WX (16 Cores, 32 Threads)

- Memory: 256GB RAM

- GPU: NVIDIA RTX 5880 Ada Generation (48GB VRAM)

- NVIDIA Driver: 550.120

- CUDA Version: 12.6.77

- cuDNN: Latest version compatible with CUDA 12.6 (cuDNN is distributed separately from the CUDA toolkit)

- Motherboard: ASUSTeK Pro WS WRX80E-SAGE SE WIFI

- Operating System: Ubuntu 22.04.5 LTS

- PyTorch Version: 2.5.0a0+e000cf0ad9.nv24.10

Deep Learning Benchmarks: Real-World Performance

After setting up the benchmarks, I tested various AI workloads to see how well the SoMod Neural PC handled them. Here’s what I found:

1. Object Detection – SSD (Single Shot MultiBox Detector) with ResNet50 Backbone on COCO Dataset

- AMP (Automatic Mixed Precision): 324 images per second

- FP32: 184 images per second

AMP delivered about 1.76x the FP32 throughput (324 vs. 184 images per second), a rate well suited to real-time object detection.
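AMP gets its speed by running eligible operations in FP16, which has a much narrower range than FP32: magnitudes below roughly 3e-8 round to zero. To keep small gradients from vanishing, PyTorch pairs autocasting with loss scaling via `GradScaler`, whose default initial scale is 2**16. The sketch below does not use PyTorch; it emulates FP16 rounding with Python's `struct` "e" format to show why scaling matters. The helper names are illustrative, not a library API.

```python
import struct

# PyTorch's GradScaler uses 2**16 as its default initial loss scale.
SCALE = 2.0 ** 16


def to_fp16(x: float) -> float:
    """Round a float to IEEE 754 half precision (binary16) and back.

    struct's 'e' format packs binary16; values too small for FP16 round
    to zero, and values beyond the FP16 range raise OverflowError.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def gradient_through_fp16(grad: float, scale: float = 1.0) -> float:
    """Emulate a gradient value passing through an FP16 operation.

    The gradient is multiplied by the loss scale, stored in FP16, then
    divided by the same scale in full precision, mirroring how loss
    scaling is undone before the optimizer step.
    """
    stored = to_fp16(grad * scale)
    return stored / scale


tiny_grad = 1e-8
print(gradient_through_fp16(tiny_grad))         # 0.0: the gradient underflowed
print(gradient_through_fp16(tiny_grad, SCALE))  # close to 1e-8: scaling preserved it
```

A fixed scale is used here for simplicity; PyTorch's scaler adjusts the scale dynamically, lowering it and skipping the step when it detects infinite or NaN gradients.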

2. NLP – BERT on SQuAD v1.1

- FP16 (with AMP): 237 sequences per second

- FP32: 134 sequences per second

AMP delivered about a 77% speedup over FP32 (roughly 1.77x), making it the clear choice for fine-tuning language models like BERT.

3. Neural Machine Translation – GNMT

- FP16 (with AMP): ~133,000 tokens per second

- FP32: ~82,000 tokens per second

AMP allowed for larger batch sizes and delivered about 1.62x the FP32 training throughput.
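Why does mixed precision permit larger batches? Activations stored in FP16 take 2 bytes per value instead of 4, so the same memory budget holds roughly twice as many. The sketch below works through that arithmetic for activations only. The memory budget and per-sample activation count are made-up illustrative values, not GNMT measurements, and a real run also needs room for weights, optimizer state, and framework workspace.

```python
# Bytes needed to store one value in each precision.
BYTES_PER_ELEMENT = {"fp32": 4, "fp16": 2}


def max_batch_size(budget_bytes: int, activations_per_sample: int, precision: str) -> int:
    """Largest batch whose saved activations fit in budget_bytes.

    Only activations are counted; weights, optimizer state, and framework
    workspace are ignored in this simplified model.
    """
    bytes_per_sample = activations_per_sample * BYTES_PER_ELEMENT[precision]
    return budget_bytes // bytes_per_sample


# Hypothetical inputs, not measurements.
budget = 24 * 1024**3   # 24 GiB reserved for activations
acts = 50_000_000       # activation values saved per sample

fp32_batch = max_batch_size(budget, acts, "fp32")
fp16_batch = max_batch_size(budget, acts, "fp16")
print(fp32_batch, fp16_batch)  # 128 257
```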

4. Recommendation Systems – Neural Collaborative Filtering (NCF) on ML-20M Dataset

- FP16: ~22.1 million samples per second

- FP32: ~21.5 million samples per second

Both precisions performed similarly (FP16 was only about 3% faster), showing that mixed precision does not always pay off: for this recommendation model, FP32 is nearly as fast.

5. Image Classification – ResNet50 on Synthetic ImageNet Data

- AMP: 1,113 images per second

- FP32: 624 images per second

At roughly 1.8x the speed of FP32, AMP is the best choice for high-speed training.

Benchmark Results: The Numbers Speak for Themselves

| Model | Precision | Key Metric | Value | Notes |
|---|---|---|---|---|
| SSD (ResNet50 Backbone) | AMP | Images/Second | 324 | Real-time object detection capabilities; about 1.76x FP32. |
| SSD (ResNet50 Backbone) | FP32 | Images/Second | 184 | About 43% lower throughput than AMP. |
| BERT (Base, SQuAD) | FP16 (with AMP) | Training Sequences/Second | 237 | |
| BERT (Base, SQuAD) | FP32 | Training Sequences/Second | 134 | About 43% lower throughput than FP16 with AMP. |
| GNMT (Translation) | FP16 (with AMP) | Training Tokens/Second | ~133,000 | Mixed precision allows larger batch sizes and faster runs. |
| GNMT (Translation) | FP32 | Training Tokens/Second | ~82,000 | |
| NCF (Recommendation) | FP16 | Training Samples/Second | ~22.1 million | |
| NCF (Recommendation) | FP32 | Training Samples/Second | ~21.5 million | Nearly on par with FP16 (about 3% slower). |
| ResNet50 (Synthetic ImageNet) | AMP | Images/Second | ~1,113 | About 1.8x the FP32 throughput. |
| ResNet50 (Synthetic ImageNet) | FP32 | Images/Second | ~624 | |
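Speedup and "percent lower" are easy to conflate: BERT's 237 vs. 134 sequences per second is a 1.77x speedup (77% faster), which is the same gap as FP32 being about 43% slower. The short script below recomputes both views for every row of the table, using only the throughput numbers reported in this post; the `compare` helper is just an illustration.

```python
# Throughput figures from the results table: (mixed precision, FP32).
RESULTS = {
    "SSD (ResNet50)": (324, 184),
    "BERT (SQuAD)": (237, 134),
    "GNMT": (133_000, 82_000),
    "NCF": (22.1e6, 21.5e6),
    "ResNet50": (1113, 624),
}


def compare(mixed: float, fp32: float) -> tuple[float, float]:
    """Return (speedup of mixed precision over FP32, % by which FP32 trails)."""
    speedup = mixed / fp32
    fp32_deficit_pct = (1 - fp32 / mixed) * 100
    return speedup, fp32_deficit_pct


for model, (mixed, fp32) in RESULTS.items():
    speedup, deficit = compare(mixed, fp32)
    print(f"{model:15s} {speedup:4.2f}x  FP32 throughput {deficit:4.1f}% lower")
```

It reports speedups of about 1.76x, 1.77x, 1.62x, 1.03x, and 1.78x for SSD, BERT, GNMT, NCF, and ResNet50 respectively.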

Final Thoughts: Why the SoMod Neural PC is a Game Changer

🔹 AMP Optimizations Deliver Significant Speed Gains – Automatic Mixed Precision raised throughput by roughly 1.6x to 1.8x on SSD, BERT, GNMT, and ResNet50.

🔹 High Memory Capacity – The GPU's large VRAM pool supports large batch sizes, while 256GB of system RAM leaves ample headroom for data loading and preprocessing.

🔹 Strong AI Performance – The Threadripper PRO CPU + NVIDIA RTX 5880 Ada GPU combo handled every workload in this test suite, from object detection to recommendation.

🔹 AI-Ready Software Stack – With CUDA 12.6, cuDNN, and PyTorch optimizations, the system is future-proof for next-gen AI workloads.

Need a High-Performance AI Workstation?

If you’re looking for a powerful deep learning machine that can accelerate your AI workloads, the SoMod Neural PC is the ideal choice.

➡️ Order yours today at somodsystems.com and experience next-level AI computing! 🚀